My Software

Public Music Software

This is all the music software I've developed! NanoTone Synth is my microtonal tuner, and Celody Life is my cellular automata music generator. Both were created with the Seraphim Automata engine I developed in 2016.

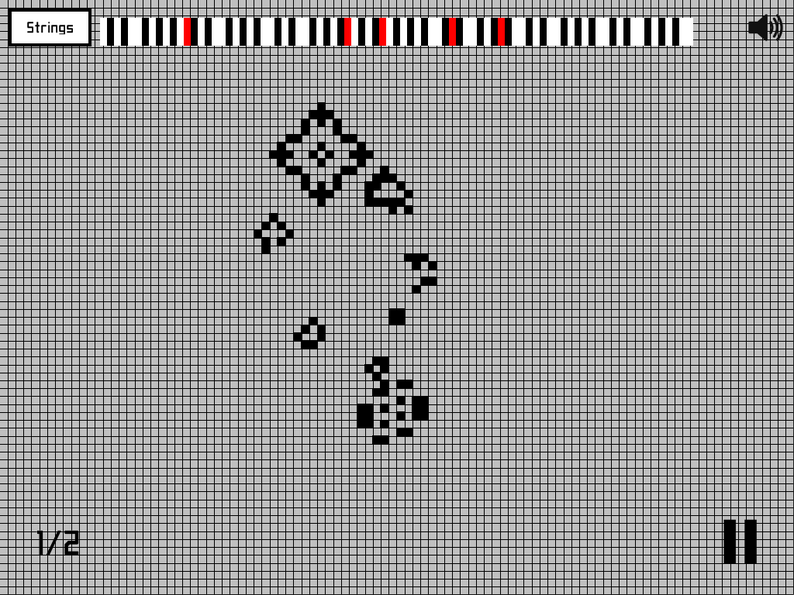

NanoTone Synth

Status: Complete

NanoTone Synth is a microtonal synthesizer and tuner. This is a useful tool for comparing the accuracy of different temperaments, and exploring unique harmonies. You can use the software in your desktop browser, or buy it on itch.io here.

Examine any temperament from 5-TET to 240-TET, and compare its stats to 12-TET.

Examine the accuracy of each harmony, up to 13-limit ratios.
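As a rough sketch of the kind of comparison involved (this is not NanoTone Synth's actual code), the accuracy of a harmony in an equal temperament can be measured as the distance in cents between a just ratio and the nearest step of the temperament. The function names and the choice of ratios below are illustrative assumptions.

```python
import math

def cents(ratio: float) -> float:
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200.0 * math.log2(ratio)

def tet_error(ratio: float, divisions: int):
    """Return (nearest step, error in cents) for `ratio` in `divisions`-TET.

    A positive error means the tempered interval is sharp of the just ratio.
    """
    step = 1200.0 / divisions          # size of one step in cents
    target = cents(ratio)              # size of the just interval in cents
    nearest = round(target / step)     # closest step of the temperament
    return nearest, nearest * step - target

# Illustrative ratios, one per prime up to the 13-limit (an assumed selection).
RATIOS = {"3/2": 3 / 2, "5/4": 5 / 4, "7/4": 7 / 4, "11/8": 11 / 8, "13/8": 13 / 8}

for name, ratio in RATIOS.items():
    for divisions in (12, 24, 31):
        steps, error = tet_error(ratio, divisions)
        print(f"{name} in {divisions}-TET: step {steps}, error {error:+.2f} cents")
```

The smaller the absolute error, the closer the temperament comes to that harmony.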

Play music in any temperament, so you can hear the notes yourself.

You can also use this to tune your instruments. For example, you can tune a guitar in 24-TET to explore quarter tones.
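For reference, the target pitches of an equal temperament follow from dividing the octave into equal frequency ratios. The sketch below assumes a reference pitch of A4 = 440 Hz, which is my assumption here and not necessarily what the software uses; `tet_frequency` is a hypothetical helper name.

```python
def tet_frequency(step: int, divisions: int, reference_hz: float = 440.0) -> float:
    """Frequency of the note `step` steps above the reference in `divisions`-TET.

    reference_hz defaults to 440 Hz (A4), an assumed reference pitch.
    """
    return reference_hz * 2.0 ** (step / divisions)

# The first few quarter-tone steps above the reference in 24-TET.
for step in range(5):
    print(f"step {step}: {tet_frequency(step, 24):.2f} Hz")
```

Every second step of 24-TET coincides with a 12-TET semitone, so the odd steps are the quarter tones.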

Use the mouse, touchscreen, or keyboard to play notes. Use the left and right arrow keys to change temperament, and the up and down arrow keys to change the menu. Use the Z and Q rows of the keyboard to play music. Press L to view the help page.

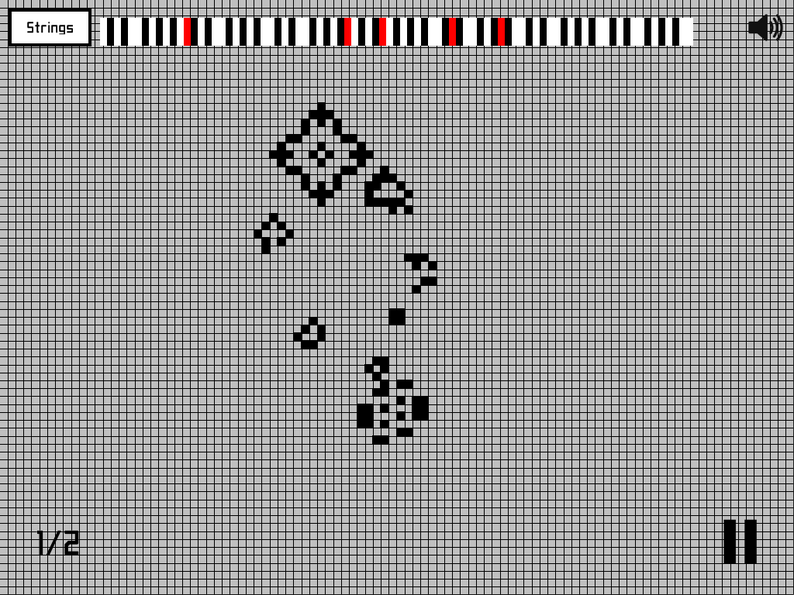

Celody Life

Status: Complete

Celody Life is generative music software. It uses cellular automata from Conway’s Game of Life to generate chords and melodies. You can buy the software on itch.io here.

Use the mouse to select cells. Press play to activate them. You can change instruments, tempo, musical scales, keys, and cellular automata rules.
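To give a feel for the idea, here is a minimal sketch of one Game of Life generation with configurable birth and survival rules, followed by one possible way to turn live cells into notes. The mapping from grid rows to scale degrees, the C major scale, and the MIDI note numbers are all assumptions made for illustration, not how Celody Life actually assigns pitches.

```python
from collections import Counter

def life_step(live, birth=frozenset({3}), survive=frozenset({2, 3})):
    """Advance a set of live (row, col) cells by one generation.

    The defaults are Conway's standard rule (born with 3 neighbors,
    survive with 2 or 3); other rules can be passed in.
    """
    # Count live neighbors for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Include live cells with zero neighbors so custom rules can keep them.
    candidates = set(counts) | set(live)
    return {
        cell
        for cell in candidates
        if (cell in live and counts[cell] in survive)
        or (cell not in live and counts[cell] in birth)
    }

MAJOR = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets of a major scale

def row_to_midi(row, key=60, scale=MAJOR):
    """Hypothetical mapping: each grid row is one scale degree above `key`."""
    octave, degree = divmod(row, len(scale))
    return key + 12 * octave + scale[degree]

def chord_for_column(live, col, key=60, scale=MAJOR):
    """Notes sounded by the live cells in one column, lowest first."""
    return sorted(row_to_midi(r, key, scale) for r, c in live if c == col)

# A horizontal "blinker" flips to vertical after one generation.
cells = {(1, 0), (1, 1), (1, 2)}
cells = life_step(cells)
print(chord_for_column(cells, 1))
```

Under this mapping, changing `scale` or `key` changes the harmony without touching the automaton, and changing `birth` and `survive` changes the patterns without touching the pitches.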

I created it in one night by merging the keyboard code from my game Seraphim Automata with Cameron Penner’s code for the Game of Life. Hopefully it can inspire someone in the fields of music or game design.
